The roots of Thermography, or heat differentiation, are ancient, dating back to the time of the pyramids. A papyrus from 1700 BC documents the association of temperature with disease.

By 400 BC, physicians commonly employed a primitive form of Thermography: they applied a thin coat of mud to a patient’s body, observed the patterns made by the different rates of mud drying, and attributed those patterns to hot and cold temperatures on the surface of the body. Hippocrates summed it up: “In whatever part of the body excess of heat or cold is felt, the disease is there to be discovered.”

The first attempt to measure heat came in the second century AD with the development of a bulb “thermoscope” by Hero of Alexandria. In the late 1500s, Galileo invigorated the science of measuring temperature by converting Hero’s thermoscope into a crude thermometer. Others followed over the centuries, developing more sophisticated devices and introducing improvements which have become standard today — for example, the mercury thermometer and the use of Fahrenheit and Celsius scales to measure temperature.

A breakthrough in Thermology, as it was then called, came in 1800 with a major discovery by Sir William Herschel, King George III’s Royal Astronomer. Experimenting with prisms to separate the various colours of the rainbow, Herschel discovered a new spectrum of invisible light which we now know as infrared, meaning “below the red.” As a natural effect of metabolism, humans constantly release varying levels of energy in the infrared spectrum, and this energy is expressed as heat. Herschel’s discovery made it possible for devices to focus on measuring infrared heat from the human body.

Modern thermometry began soon after, in 1835, with the invention of a thermo-electrical device which established that the temperature in inflamed regions of the body is higher than in normal areas. This device also confirmed that the normal healthy human temperature is 98.6° Fahrenheit or 37° Celsius.

By the 1920s, scientists were using photography to record the infrared spectrum, and this led to new applications in thermometry and other fields. The 30s, 40s, and 50s saw remarkable improvements in imaging with special infrared sensors, thanks in large part to World War II and the Korean conflict, during which infrared was used for a variety of military applications, such as troop movement detection. Once these infrared technologies were declassified post-war, scientists immediately turned to researching their application in clinical medicine.

In the 60s, large amounts of published research and the emergence of physician organizations dedicated to the use of thermal imaging, such as the American Academy of Thermology, brought about greater public and private awareness of the science.

By 1972, the Department of Health, Education and Welfare announced that Thermography, as it had become known, was “beyond experimental” in several areas, including evaluation of the female breast.

On the heels of this, efforts to standardize the field began in earnest, aided by the arrival of mini-computers in the mid-70s, which provided colour displays, image analysis, and the great benefits of image and data storage — and eventually faster communication over the Internet.

By the late 70s and early 80s, detailed standards for thermography were in place, and new training centres for physicians and technicians were graduating professionals who would make medical thermography available to the general public.

In 1982, the Food and Drug Administration (FDA) approved medical thermography for use “where variations of skin temperatures might occur.”

In 1988, the US Department of Labor introduced coverage for thermography in Federal Workers’ Compensation claims. These and other milestones — including a brief period when Medicare covered the use of thermography — encouraged the expansion of thermography training resources and launched a dramatic refinement of imaging devices over the next few decades.

Thermography Today

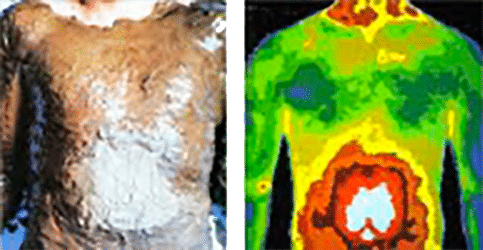

Today, modern systems provide high-speed, high-resolution imaging coupled with state-of-the-art computerized digital technology. This results in clear, detailed images captured by certified technicians for qualified physicians to interpret. Thermography is now recognized and valued as a highly refined science with standardized applications in Neurology, Vascular Medicine, Sports Medicine, Breast Health, and many other specialty areas.

At Thermography Medical Clinic, we call thermography a “Health Discovery Tool” and we consider it to be a very important part of a preventive wellness program. By accurately measuring temperature regions and identifying thermographic patterns, thermography measures inflammation, often long before symptoms are felt or a disease is eventually diagnosed. In other words, thermography sees your body asking for help.

The increasing consensus on the role of inflammation in disease development was recently discussed in these statements:

Inflammatory responses and inflammation-associated diseases in organs

Why You Should Pay Attention to Chronic Inflammation

Healthier is Smarter!

Sharon Edwards – BA, R(Hom), DNM, RNCP, CTT