An amazing advancement in the medical field is always likely to have a rich history behind it, and thermography is no exception! Have you ever wondered how thermography came about? Here is a timeline of thermography to give a little insight into how far this fantastic medical tool has come!

The Beginnings

The roots of thermography date back to the time of the pyramids. In 1700 BC, a papyrus documented the association of heat with disease, the root of what modern thermography helps us understand today! By 400 BC, physicians were using mud to observe the heat in a patient’s body. They would apply a thin layer of mud and watch to see which areas of the body dried faster than others. They would then attribute the different drying rates to hot and cold temperatures on the surface of the body.

“In whatever part of the body excess of heat or cold is felt, the disease is there to be discovered.” – Hippocrates

The Creation of the Thermometer

The first attempt to accurately measure heat began in the first century AD, when Hero of Alexandria created his bulb “thermoscope.” In the late 1500s, Galileo improved upon the “thermoscope” by developing a crude thermometer. Over time, several others refined Galileo’s thermometer until we finally arrived at the standard thermometer scales we read today.

The Discovery of Infrared Light

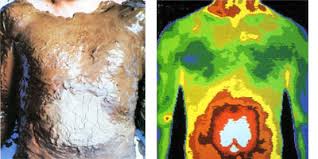

In 1800, a breakthrough in thermology was made by Sir William Herschel, King George III’s Royal Astronomer. While experimenting with prisms to separate the various colors of the rainbow, Herschel discovered a form of light beyond the red end of the visible spectrum that cannot be seen by the eye: infrared light. Due to the natural effect of metabolism, the human body is constantly releasing varying levels of energy in the infrared spectrum, and it’s this energy that is expressed as heat in our bodies. It’s Herschel’s amazing discovery that made it possible for devices to measure infrared heat from the human body.

The Start of Modern Thermometry

It was 35 years after Herschel’s discovery, in 1835, that modern thermometry began with the invention of a thermo-electrical device. This device established that the temperature in inflamed regions of the body is higher than in normal areas. It was also through this device that we were able to set another standard: that the temperature of a normal, healthy human body is 98.6 °F or 37 °C.

Wartime Improvements for Thermometry

The 1920s brought about the use of photography to record the infrared spectrum, which led to the use of thermometry in other fields, such as the military. During the 1930s, 40s, and 50s, thermometry saw massive improvements in imaging thanks to special infrared sensors. During World War II and the Korean Conflict, infrared was used for a variety of military applications, such as troop movement detection. Once the infrared technologies were declassified after the war, they were turned once again to their original application: clinical medicine.

The Emergence of Thermography

The 1960s were a time when a large amount of research was published and many physician organizations were beginning to show their dedication to the use of thermal imaging. Organizations such as the American Academy of Thermology introduced this science on a greater public and private scale. By 1972, the Department of Health, Education and Welfare had declared thermography “beyond experimental” in multiple areas, including the evaluation of the female breasts.

In the mid-1970s, minicomputers arrived, providing color displays, image analysis, and the great benefits of image and data storage. By the late 1970s and early 1980s, detailed standards for thermography had been established. Training centers for physicians and technicians were turning out professionals who would make medical thermography available to the general public!

Medical Thermography is FDA Approved

In 1982, the Food and Drug Administration (FDA) approved medical thermography for use “where variations of skin temperatures might occur.” In 1988, the US Department of Labor introduced coverage for thermography in Federal Workers’ Compensation claims. These milestones and a few others encouraged the expansion of thermography training resources and started a massive refinement of imaging devices in the decades that followed.

Modern Thermography Today

Thanks to the state-of-the-art technology available today, certified technicians are able to capture clear, detailed images that can be interpreted by qualified physicians. Thermography is recognized and valued as a highly refined science with standardized applications in many fields, such as neurology, vascular medicine, sports medicine, and breast health.

Here at Lisa’s Thermography and Wellness, we are excited by the rich history and journey of medical thermography and look forward to the advancements still to come. We truly believe thermography is one of the greatest breakthroughs in medical history, and we stand firmly behind what it can do for our patients.